Discover the History of the IQ Test: The Evolution and Impact of Intelligence Testing

IQ tests have been around for a while now, but have you wondered how they came about and why? Who created the IQ test, and what was its purpose? In this article, we explore the history of intelligence testing and how IQ tests evolved to be what we know today. We'll also cover some of the checkered history of IQ testing in the US, and the reforms that followed.


Imagine a world where you could measure intelligence as easily and quickly as you measure height or weight. This was the world the early pioneers of IQ tests envisioned. However, human intelligence is so complex that it took more than a century of research to develop the intelligence tests we have today.

The first formal intelligence test arrived in 1905, when French psychologists Alfred Binet and Théodore Simon created the Binet-Simon Scale. Designed to identify students who needed additional educational support, this test laid the foundation for modern IQ testing.

Back then, IQ tests were lengthy, serious assessments that psychologists administered in professional settings. Fast forward to today, and you can hop online and take a quick IQ test from your couch.

But how did the concept of IQ testing originate? Who first decided to create a system for scoring and comparing human intelligence? And can an IQ test really tell whether you are smart?

In this article, we explore the fascinating history of the IQ test, tracing its origins and development over time. From early attempts to measure cognitive abilities to the sophisticated tools used today, we'll look at how IQ testing has evolved and what it reveals about our understanding of human intelligence.

Read on as we get into some IQ test history!


Beginnings of Intelligence Testing: The Pioneers

Several key figures of the late 19th and early 20th centuries set the stage for the development of formal IQ tests. These early pioneers, including Sir Francis Galton, Wilhelm Wundt, James McKeen Cattell, and Hermann Ebbinghaus, explored various aspects of human cognition and laid the groundwork for later advances in measuring intelligence.

Sir Francis Galton: Pioneering Intelligence Measurement

One of the earliest pioneers in the study of intelligence was the 19th-century polymath Sir Francis Galton. Galton was fascinated by the idea of measuring human abilities and believed that mental traits, like physical traits, could be quantified and were heritable. Influenced by Charles Darwin's theory of natural selection, he argued that intelligence was a key trait passed down through generations.

Galton's early experiments included measuring reaction times and sensory acuity, which he believed were indicators of mental capacity. Although his methods were rudimentary and largely focused on physical attributes like reflexes, Galton's work laid the conceptual groundwork for future intelligence testing. He also proposed a scoring system for these tests, which was an early step toward developing more sophisticated methods of assessing human intelligence.

Wilhelm Wundt: Establishing the First Psychology Laboratory

Wilhelm Wundt, often regarded as the father of modern psychology, made significant contributions to studying human cognition that influenced later intelligence testing. In 1879, he established the first psychology laboratory at the University of Leipzig in Germany, where he conducted experiments on sensory perception, attention, and reaction time.

Wundt's work focused on understanding the structure of the mind through controlled experiments, laying the foundation for experimental psychology. His emphasis on objective measurement and standardized procedures was crucial for the development of intelligence testing, even though his direct contributions to IQ tests were limited.

James McKeen Cattell: Introducing the Concept of Mental Tests

James McKeen Cattell, a student of Wundt, was one of the first psychologists to use the term "mental test." In the late 1880s, Cattell began developing tests that measured basic cognitive functions such as memory, reaction time, and sensory acuity. He aimed to create a scientific approach to understanding individual differences in mental abilities.

Although Cattell's tests focused more on sensory and motor skills than on complex cognitive functions, his work was instrumental in advancing the idea that mental abilities could be systematically measured. This idea directly influenced the development of later intelligence tests.

James McKeen Cattell should not be confused with the Cattell of the Cattell-Horn-Carroll (CHC) theory, which has become one of the most widely accepted frameworks for understanding cognitive abilities. The CHC theory integrates the ideas of fluid and crystallized intelligence first proposed by Raymond Cattell (not directly related to James McKeen Cattell) with the research of John L. Horn and John B. Carroll. The theory's influence is evident in modern intelligence tests like the Wechsler scales, which assess multiple cognitive domains that broadly align with the CHC model.

Hermann Ebbinghaus: Innovating with Memory and Learning Tests

Hermann Ebbinghaus, another key figure in the early history of psychology, is best known for his pioneering research on memory. In the late 19th century, Ebbinghaus developed experimental methods to study how memory works, including using nonsense syllables to measure learning and forgetting over time.

His research demonstrated that cognitive functions could be systematically studied and quantified, a significant departure from the introspective methods commonly used at the time. Ebbinghaus also developed one of the first intelligence tests that went beyond sensory measurement, focusing instead on higher cognitive processes such as memory and comprehension. His work laid the groundwork for more comprehensive intelligence tests that would emerge in the early 20th century.

The First Modern IQ Test: Binet-Simon Scale

While Sir Francis Galton planted the seeds, the real breakthrough in intelligence testing came from the work of French psychologist Alfred Binet and his colleague Théodore Simon.

In 1904, the French government commissioned Binet and Simon to develop a method for identifying students who might require additional educational assistance due to learning disabilities. Their solution, published in 1905, was the Binet-Simon Scale, widely regarded as the first modern intelligence test.

The groundbreaking aspect of this test, particularly in its 1908 revision, was the idea of mental age: the age level at which a child actually performed, which could then be compared with the child's chronological age. By presenting a series of age-graded tasks, the test could estimate a child's level of cognitive development.

In 1912, German psychologist William Stern expanded on this concept by coining the term "Intelligence Quotient" (IQ). He proposed dividing an individual's mental age by their chronological age; Lewis Terman later multiplied this ratio by 100 to remove decimals. This scoring method provided a standardized way to compare children of different ages, and it was incorporated into various intelligence tests, including the Stanford-Binet Intelligence Scale.

For example, if a 10-year-old child performed at the level expected of a typical 8-year-old, their Intelligence Quotient score would be calculated as (8/10) x 100 = 80, indicating below-average cognitive ability for their age group. This approach allowed for better educational placement and support tailored to each child's needs.
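The ratio formula (mental age divided by chronological age, multiplied by 100) is simple enough to write as a small function. This is only an illustrative sketch: the function name `ratio_iq` is hypothetical and does not come from any published test.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Historical "ratio IQ": mental age divided by chronological age, times 100."""
    if chronological_age <= 0:
        raise ValueError("chronological_age must be positive")
    # Multiplying first keeps whole-number examples exact in floating point.
    return 100 * mental_age / chronological_age


# The example above: a 10-year-old performing at the level of a typical 8-year-old.
print(ratio_iq(8, 10))   # 80.0
# A child performing exactly at their own age level scores 100.
print(ratio_iq(10, 10))  # 100.0
```

The ratio only makes sense while mental age keeps rising with chronological age. For adults it breaks down, which is one reason later tests such as the Wechsler scales moved to a different scoring method.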

Early IQ Testing in the USA: Stanford-Binet IQ Tests

The idea of intelligence testing quickly spread from Europe to the United States, where an American psychologist from Stanford University named Lewis Terman played a pivotal role in its adoption. Building on the work of Alfred Binet and Théodore Simon, and the concept of the Intelligence Quotient developed by William Stern, Terman undertook an extensive project to adapt and standardize the test for American populations.

The result was the Stanford-Binet Intelligence Scale, first published in 1916. Terman didn't just translate the Binet-Simon Scale; he introduced significant modifications, including a normative sample based on American children, which helped to standardize the test for the US population. This revised version became widely used in American schools and clinics, cementing the role of IQ tests in educational settings. The Stanford-Binet test also popularized the concept of IQ as a standardized measure of intelligence, which became foundational in psychology and education.

Educators saw these intelligence tests as a valuable tool for predicting academic achievement and identifying students who might require specialized support or enrichment programs. When the United States entered World War I, Terman was among the psychologists who helped the Army develop group intelligence tests for screening recruits, a project that further demonstrated the tests' perceived usefulness and contributed to their widespread acceptance.

As IQ testing gained traction in the early 20th century, its use expanded beyond identifying learning disabilities. The Stanford-Binet Intelligence Scale and other emerging IQ tests were used for various purposes, including admission to gifted programs, vocational or academic placement, and immigration policies. The Stanford-Binet also influenced the development of subsequent intelligence tests, including the Wechsler scales, which would later become standard tools in psychological assessment.

However, criticisms soon arose regarding the limitations of these IQ tests and their potential cultural biases. Concerns were raised that IQ scores could be heavily influenced by socioeconomic background, education quality, and cultural experiences rather than reflecting innate intellectual capabilities. Ethical concerns also emerged about the use of these tests in supporting eugenics policies and restrictive immigration laws.

Stick around; we'll discuss IQ testing drawbacks and reforms later in this article.

Role of the Army and World War I in IQ Testing

The development and popularization of IQ tests in the United States were significantly influenced by the military's needs during World War I. The US Army required a quick and efficient way to assess the intellectual capabilities of large numbers of recruits and assign them to suitable roles. This need led to the creation of the Army Alpha and Army Beta tests, which marked a pivotal moment in the history of intelligence testing.

Development of the Army Alpha and Beta Tests

In 1917, under the direction of psychologist Robert Yerkes, the American Psychological Association (APA) formed a committee to design intelligence tests that could be administered to new military recruits. The result was two distinct tests: the Army Alpha and the Army Beta.

The Army Alpha test was a written examination designed for literate English-speaking recruits. It included verbal analogies, arithmetic problems, and general knowledge questions. This test aimed to assess verbal ability, numerical ability, and the ability to follow directions, all of which were considered important for determining a recruit's potential roles within the military.

However, the Army recognized that many recruits were either illiterate or non-English speakers. To accommodate these individuals, the Army Beta test was developed as a nonverbal assessment. The test included picture-based questions and tasks such as maze solving and pattern recognition, allowing the Army to evaluate cognitive abilities without requiring language proficiency.

Impact on Military Operations and Beyond

The Army Alpha and Beta tests were administered to roughly 1.75 million recruits during World War I. These tests were among the first large-scale applications of intelligence testing, demonstrating the utility of such assessments in quickly evaluating cognitive abilities and assigning roles based on intellectual strengths.

While the primary purpose was to streamline the process of assigning military roles, the success of these tests had a profound impact beyond the military. Psychologists and educators recognized the potential of IQ tests for broader applications, including identifying students for specialized educational programs and assessing individuals for various occupational roles.

Post-War Expansion of IQ Testing

After the war, the widespread use of the Army Alpha and Beta tests contributed to the growing acceptance of IQ testing in civilian contexts. These tests highlighted the perceived value of standardized intelligence assessments, leading to their increased use in schools, businesses, and other institutions.

In the 1920s, IQ tests became tools for educational placement, identifying gifted students, and diagnosing intellectual disabilities. The military's adoption of IQ testing during World War I also laid the groundwork for future uses in various fields, including psychology, education, and employment.

However, it is important to note that these early tests were not without controversy. Critics raised concerns about cultural and socioeconomic biases in the tests, and the use of IQ scores to make significant decisions about individuals' futures sparked debates that continue to this day.

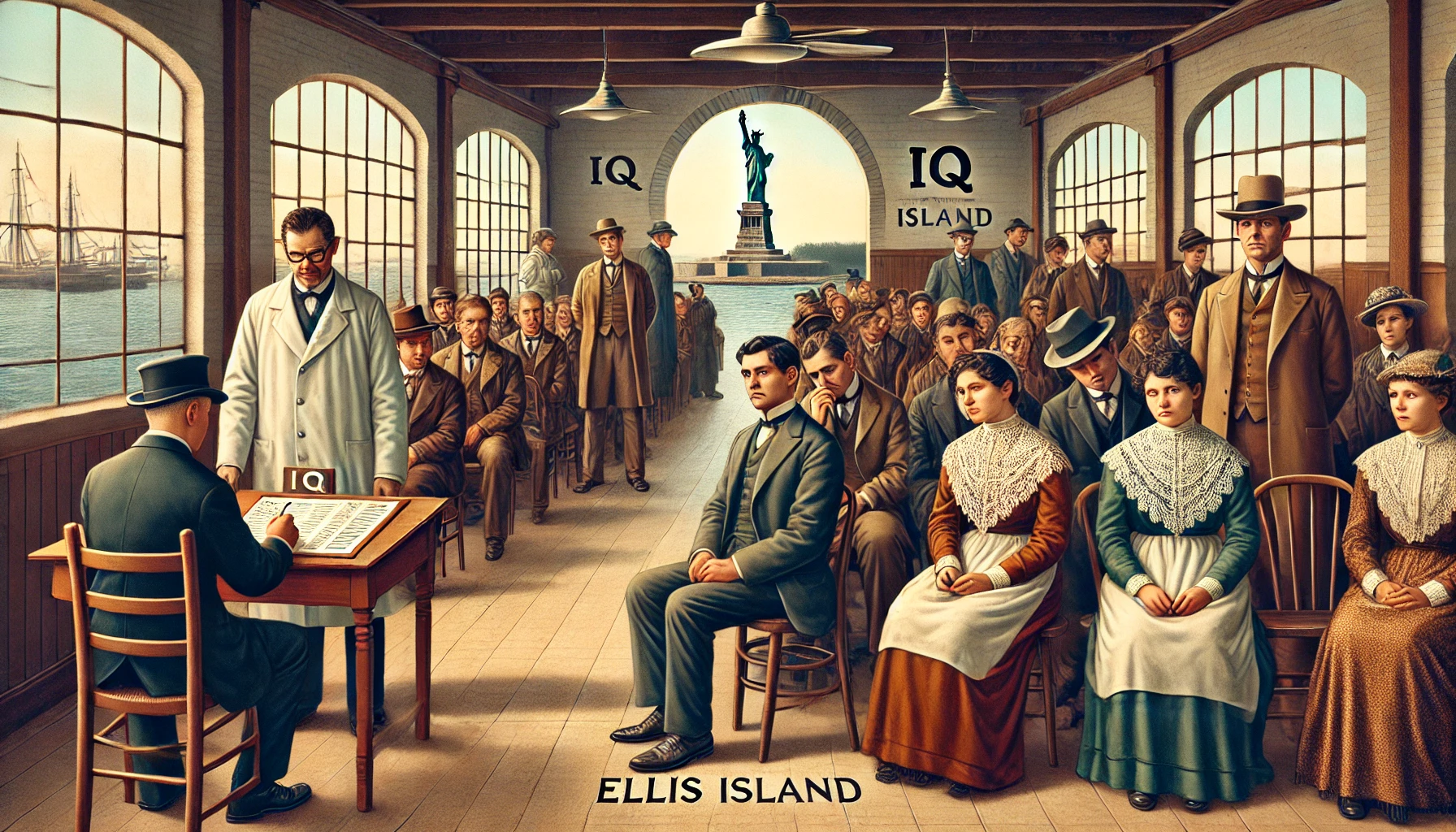

IQ Testing and Immigration: Ellis Island Controversy

In the early 20th century, IQ tests also entered the realm of immigration, particularly at Ellis Island, the primary entry point for immigrants to the United States. As the US experienced a surge in immigration, officials sought methods to evaluate the "fitness" of incoming individuals, often driven by the eugenics movement's emphasis on inherited traits.

Beginning around 1913, psychologist Henry Goddard and his assistants used translated versions of Binet-style tests to assess newly arrived immigrants, and Public Health Service physicians at Ellis Island, notably Howard Knox, devised nonverbal performance tests, such as puzzles and form boards, for people who did not speak or read English. Screeners believed these tests could identify individuals deemed "feeble-minded" or intellectually unfit, who were then at risk of being denied entry into the United States.

Impact of IQ Testing on US Immigration Policies

The results of IQ testing had significant implications for US immigration policy. Goddard's findings, together with the Army Alpha and Beta data as interpreted in psychologist Carl Brigham's 1923 book A Study of American Intelligence, were cited in support of restrictive immigration laws, most notably the Immigration Act of 1924. This act aimed to limit the influx of immigrants from regions deemed to have lower average IQ scores, particularly targeting those from Southern and Eastern Europe.

These policies were rooted in the erroneous belief that differences in test scores between ethnic groups reflected fixed, inherited differences in intelligence, and that certain groups were inherently less intelligent. As a result, IQ tests in this context became a tool for enforcing discriminatory practices and perpetuating xenophobic attitudes.

Ethical Concerns of Using IQ Tests for Immigration

The application of IQ testing in immigration was met with considerable criticism, particularly regarding the cultural and linguistic biases inherent in the tests. Many immigrants tested at Ellis Island came from non-English-speaking backgrounds, and the tests failed to account for these differences. The assumption that these tests could accurately measure an individual's intellectual capacity, regardless of cultural or educational background, was deeply flawed.

This misuse of IQ testing has since been widely discredited by contemporary researchers, who have criticized the way these tests were used to justify exclusionary and discriminatory immigration policies. The legacy of these practices is a reminder of the dangers of applying scientific tools without consideration of cultural and ethical contexts.

Legacy and Reflection

The history of IQ testing at Ellis Island and its role in shaping immigration policy is a complex and troubling chapter in the broader story of intelligence testing. It shows how scientific measures can be misused to serve social and political agendas. Today, the legacy of this period continues to inform debates about the validity and ethics of IQ testing, particularly in how these tests are used in policy-making and social contexts.

21st Century IQ Testing: Wechsler Intelligence Scales

The widespread use of the Stanford-Binet Intelligence Scale in the early 20th century marked a significant turning point in IQ testing. Because the Binet-based scales were designed with children in mind, psychologist David Wechsler recognized the need for an intelligence test built specifically for adults, one that improved on group tests such as the Army Alpha and Beta. This led to the Wechsler-Bellevue Intelligence Scale in 1939, which later evolved into the Wechsler Adult Intelligence Scale (WAIS) in 1955.

The WAIS represented a shift toward a more comprehensive assessment of cognitive abilities. Rather than relying solely on a single IQ score, it reported separate Verbal and Performance scores alongside the Full Scale IQ, and later editions refined this into index scores covering:

- Verbal comprehension

- Perceptual reasoning

- Working memory

- Processing speed

This multidimensional approach to testing intelligence acknowledged the complexity of the human mind and the diverse ways cognitive abilities can manifest. Wechsler applied the same approach to other age groups, producing:

- Wechsler Intelligence Scale for Children (WISC), first published in 1949

- Wechsler Preschool and Primary Scale of Intelligence (WPPSI), first published in 1967

By the mid-20th century, IQ testing had become a well-established practice in psychology and education. Numerous intelligence tests were available to assess different aspects of cognitive functioning across various developmental stages. Among these, the Wechsler scales have become the most widely used, surpassing the Stanford-Binet in prevalence due to their versatility and the detailed insights they provide through multiple index scores.

Today, the Wechsler scales are widely considered the gold standard in modern intelligence testing and are used extensively in clinical, educational, and research settings worldwide.

Advances in the Wechsler Adult Intelligence Scale (WAIS-IV)

The Wechsler Adult Intelligence Scale has undergone several revisions to improve its accuracy and applicability. The fourth edition, the WAIS-IV, was published in 2008 and introduced several important changes and enhancements, including:

- Updated Subtests: The WAIS-IV includes new subtests, such as Visual Puzzles and Figure Weights, to provide a more comprehensive assessment of cognitive abilities.

- Improved Scoring System: The scoring system was refined to better differentiate between various cognitive abilities and provide a more detailed profile of an individual’s strengths and weaknesses. The WAIS-IV offers four primary index scores: Verbal Comprehension, Perceptual Reasoning, Working Memory, and Processing Speed, alongside the Full Scale IQ (FSIQ).

- Normative Data: The WAIS-IV uses updated normative data, which more accurately reflects the current population. This ensures that the test results are more relevant and accurate for today’s diverse society.

- Enhanced Clinical Utility: The WAIS-IV offers improved tools for diagnosing learning disabilities, cognitive impairments, and other psychological conditions. It is widely used in clinical settings, educational environments, and research studies.

The WAIS-IV continues to be a cornerstone in psychological assessment, providing valuable insights into an individual's cognitive functioning and helping to guide interventions and support. The test has also been adapted for use in various cultures and languages worldwide, enhancing its applicability and accuracy across diverse populations.

By integrating these advancements, the WAIS-IV maintains its relevance and effectiveness in measuring adult intelligence, offering a robust framework for understanding the multifaceted nature of human intelligence.

Modern IQ Testing: Rise of Online Intelligence Tests

As intelligence testing continues to evolve, the available options are more diverse and accommodating than ever. With the advent of the internet and widespread access to digital tools, you can now get an estimate of your IQ without leaving home by taking an online IQ test. These online assessments have become a popular, convenient, and often fun way to gauge cognitive abilities.

These tests aim to provide an instant snapshot of your mental agility through engaging challenges often presented in a game-like format. Whether you're seeking a lighthearted mental workout or a comprehensive professional evaluation, there's an assessment tailored to your needs. Online platforms offer a variety of test types, from simple brain teasers to more sophisticated tests based on established IQ scales.

At one end of the spectrum are ultra-condensed brain teasers such as the three-question Cognitive Reflection Test, perfect for a quick cognitive "checkup." At the other end are in-depth professional assessments by licensed psychologists, who provide multidimensional analyses of your cognitive abilities. In between, you'll find a buffet of online IQ tests and standardized assessments, ranging from entertaining puzzles to rigorous self-evaluations.

However, it's important to remember that online IQ tests can't replicate the depth and rigor of a formal professional evaluation. They may not be standardized or calibrated like traditional IQ tests, and the variability in quality and accuracy can lead to misleading results. Additionally, the lack of controlled environments can affect the reliability of these assessments.

Despite these limitations, online IQ tests offer valuable benefits. They provide an accessible and engaging way for individuals to explore their cognitive strengths and weaknesses, often at no cost and with immediate feedback.

Impact of Technology on Traditional IQ Testing

Beyond the rise of online IQ tests, technology has also significantly impacted the administration, scoring, and accessibility of traditional IQ tests. One of the most notable advancements is Computerized Adaptive Testing (CAT), which adjusts the difficulty of questions based on the test taker's real-time responses. This method allows for a more precise measurement of a person's cognitive abilities while reducing the number of items needed to determine an accurate score.
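To make the idea of adjusting difficulty in real time more concrete, here is a deliberately simplified sketch. It uses a staircase (up-down) rule borrowed from psychophysics: the next item gets harder after a correct answer and easier after a miss, with the step shrinking each time. Real CAT systems instead rely on item response theory models and large calibrated item banks. The difficulty scale, the function names, and the simulated test taker are all hypothetical.

```python
import math
import random


def adaptive_test(answer, n_items=10, start=0.0, step=1.0, shrink=0.7):
    """Simplified adaptive test using a staircase (up-down) rule.

    `answer(difficulty)` returns True if the test taker answers an item of
    that difficulty correctly. Difficulty uses a hypothetical scale where 0
    means "average". Returns the final difficulty estimate and a list of
    (difficulty, correct) pairs for the items administered.
    """
    difficulty = start
    history = []
    for _ in range(n_items):
        correct = answer(difficulty)
        history.append((difficulty, correct))
        # Harder next item after a correct answer, easier after a miss.
        difficulty += step if correct else -step
        # Shrink the step so the estimate settles near the taker's level.
        step *= shrink
    return difficulty, history


def simulated_taker(true_ability, rng):
    """Hypothetical test taker who succeeds more often on easier items."""
    def answer(difficulty):
        # Logistic success curve, loosely modeled on a one-parameter IRT item.
        p_correct = 1.0 / (1.0 + math.exp(difficulty - true_ability))
        return rng.random() < p_correct
    return answer


if __name__ == "__main__":
    taker = simulated_taker(true_ability=1.0, rng=random.Random(42))
    estimate, items = adaptive_test(taker)
    for difficulty, correct in items:
        print(f"difficulty {difficulty:+.2f} -> {'correct' if correct else 'wrong'}")
    print(f"estimated level: {estimate:+.2f}")
```

Because each item is chosen near the current estimate, few items are spent on questions that are far too easy or far too hard, which is the efficiency gain adaptive testing aims for.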

Adaptive testing has been built into a number of modern ability assessments. The Wechsler scales are now also available for digital administration, although they still rely mainly on fixed start points and discontinue rules rather than full CAT. Tailoring items to each individual's ability level makes testing more efficient and precise. Additionally, computerized testing platforms have expanded access to IQ testing, allowing for more widespread and convenient testing in diverse settings, from schools to clinical environments.

Technology has also streamlined the scoring process, reducing the potential for human error and providing immediate results. This immediacy benefits test-takers and administrators, facilitating quicker decision-making in educational placements, clinical diagnoses, and research applications.

As technology advances, integrating AI and machine learning into IQ testing, including online tests, may allow even more refined and personalized assessments. Adaptive algorithms that tailor the testing experience to each person's performance could make intelligence testing more accessible and, if properly validated, more accurate in the digital age.

Overall, while online IQ tests are useful for self-assessment and curiosity, they should be considered supplementary to professional evaluations rather than a replacement. They can be a fun and accessible way to get a general sense of your cognitive strengths, but they lack the comprehensive analysis provided by in-person assessments.

Criticisms and Reforms in IQ Testing Practices

Despite their widespread use, IQ tests have faced significant criticism over the years. Critics argue that IQ tests can be biased, culturally insensitive, and overly simplistic in measuring the complex nature of human intelligence.

Some of the main criticisms of IQ tests include cultural bias, socioeconomic bias, rising scores over time, the overemphasis on a single score, and ethical concerns. Let's examine each of these and the steps toward reform.

Cultural Bias

Early IQ tests were often biased against individuals from non-Western cultures or those with limited formal education. These biases were particularly evident in the use of IQ tests to label certain immigrant groups as intellectually inferior during the early 20th century, which influenced restrictive immigration policies.

Reforms have aimed to create more culturally fair assessments that minimize language and cultural knowledge biases. An example is the development of culture-fair tests like Raven's Progressive Matrices, designed to reduce cultural and linguistic influences on test performance.

Socioeconomic Bias

Critics argue that IQ scores can be influenced by a person's socioeconomic background, leading to unfair advantages or disadvantages. Factors such as access to education, quality of schooling, and family income levels can all impact an individual's test performance.

Modern tests aim to account for these differences by including more diverse and representative samples during test development. This approach seeks to ensure that IQ tests reflect an individual's cognitive abilities more accurately, regardless of socioeconomic status. Ongoing research into these biases highlights the need for continuous revisions and updates to IQ testing methodologies.

Flynn Effect: Rising IQ Scores Over Time

The Flynn Effect, named after researcher James R. Flynn, refers to the rise in average IQ test scores observed over the 20th century. The phenomenon has been documented across many countries, with average scores increasing by about 3 points per decade. It describes a rise in population averages from one generation to the next, not individuals working to raise their own scores.

The implications of the Flynn Effect challenge the notion of static intelligence. It suggests that environmental factors—such as improved nutrition, education, and access to information—play a significant role in shaping cognitive abilities. This rise in IQ scores over time indicates that intelligence is not solely a fixed trait but can be influenced by societal changes and living conditions.

To account for the Flynn Effect, IQ tests have been periodically re-normed. This process involves adjusting the scoring system to ensure the average IQ score remains around 100. Without this re-norming, contemporary test-takers would score artificially higher on older test versions. The Flynn Effect highlights the importance of considering environmental factors in intelligence testing. It reinforces the need for continuous updates to ensure that intelligence tests remain accurate and relevant.
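Re-norming can be illustrated with a deviation-style score, the kind of score modern tests such as the Wechsler scales report: a raw score is placed relative to the mean and standard deviation of a norm sample, then rescaled to a mean of 100. This sketch assumes a standard deviation of 15 IQ points (the Wechsler convention) and uses made-up raw-score norms; real tests use detailed age-band norm tables rather than a single formula. It also turns the rough 3-points-per-decade figure into an estimate of how much outdated norms inflate scores.

```python
def deviation_score(raw, norm_mean, norm_sd, scale_mean=100.0, scale_sd=15.0):
    """Place a raw score on an IQ-style scale relative to a norm sample.

    Assumes roughly normally distributed raw scores and a scale with
    mean 100 and SD 15; real tests use age-band norm tables instead.
    """
    z = (raw - norm_mean) / norm_sd  # distance from the norm mean in SD units
    return scale_mean + scale_sd * z


def flynn_inflation(years_since_norming, points_per_decade=3.0):
    """Rough score inflation from outdated norms (about 3 points per decade)."""
    return points_per_decade * years_since_norming / 10


# Hypothetical norms: the same test normed twice, 20 years apart.
old_norms = {"norm_mean": 40.0, "norm_sd": 10.0}
new_norms = {"norm_mean": 44.0, "norm_sd": 10.0}

raw = 44.0  # an exactly average performance by today's standard
print(deviation_score(raw, **old_norms))  # 106.0 against the old norms
print(deviation_score(raw, **new_norms))  # 100.0 after re-norming
print(flynn_inflation(20))                # 6.0 points expected at the Flynn rate
```

In this made-up example, the same average performance looks 6 points "smarter" against 20-year-old norms, which is exactly the inflation that periodic re-norming removes.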

Overemphasis on a Single Score

Traditional IQ tests often rely on a single score to represent an individual's intelligence. This approach has been criticized for oversimplifying the complexity of human intelligence and for overstating how much one number can tell us about a person.

Modern approaches advocate for a more comprehensive assessment that considers multiple cognitive domains and provides a detailed profile of strengths and weaknesses. Additionally, alternative theories, such as Howard Gardner's theory of multiple intelligences and the concept of emotional intelligence (EQ), suggest that intelligence encompasses a broader range of abilities than those captured by traditional IQ tests.

Ethical Concerns

The use of IQ tests in making high-stakes decisions, such as educational placement or employment selection, has raised ethical concerns. Early in their history, IQ tests were used to support eugenic policies, including forced sterilization and immigration restrictions, based on the erroneous belief that low scores reflected fixed, inherited deficits that justified such measures.

Reforms have focused on ensuring that IQ tests are used responsibly and alongside other assessments, so that decisions are well-rounded. Professional organizations such as the American Psychological Association have established guidelines and standards to promote the ethical use of IQ tests.

The Use of IQ Tests in Various Fields

Today, IQ tests are used in a variety of fields, including:

- Psychology: Psychologists use IQ tests to assess cognitive functioning, diagnose intellectual disabilities, and identify areas of cognitive strengths and weaknesses.

- Education: Educators use IQ tests to place students in appropriate educational programs, identify gifted and talented students, and inform individualized education programs (IEPs) for students with special needs.

- Employment: Some employers use IQ tests as part of their hiring processes to evaluate candidates' problem-solving abilities, critical thinking skills, and overall cognitive potential.

- Research: Researchers use IQ tests to study human cognition, explore the relationship between intelligence and various life outcomes, and investigate the genetic and environmental factors influencing intelligence.

Intelligence Is a Journey: Embrace It!

The history of IQ tests, from Sir Francis Galton's early experiments to the refined Stanford-Binet Intelligence Scales, illustrates our enduring quest to measure and understand human intelligence. Alfred Binet's pioneering work, commissioned by the French government, and the subsequent adaptations by American psychologists have provided us with tools to gauge cognitive abilities across various contexts.

It's crucial to remember that a numerical score does not solely define intelligence or potential.

Each person's intelligence is multifaceted, encompassing a range of mental abilities and cognitive processes. Whether your IQ score is high, low, or average, it doesn't define your worth. Intelligence tests are one of many tools for understanding our cognitive strengths and weaknesses.

The popularity of online IQ tests has made measuring intelligence more accessible, but these should be considered supplementary to professional evaluations. The true value of an IQ test lies in its ability to highlight areas for growth and development, guiding educational placement and personalized learning strategies.

IQ tests have a storied history, but their future holds even more promise. The continued refinement of tests like the Wechsler scales, which have become the most widely used tools for assessing intelligence, highlights our ongoing efforts to create more accurate and inclusive measures. Addressing cultural and socioeconomic biases can make these tests more representative of our diverse society.

Remember, intelligence is a journey, not a destination; embrace the opportunities to learn and grow, and let your curiosity lead you to new discoveries.

Ultimately, what truly matters is how we use our cognitive abilities to solve problems, create, build relationships, and contribute to our communities.
